Data Pipelines: Preparing Your Data to Deliver Value

Once your data warehouse and governance framework are in place, the next step is building your data pipelines. Often referred to as Extract, Transform, Load (ETL), this process involves creating automated workflows that move and prepare data for specific business needs.

What Is a Data Pipeline?

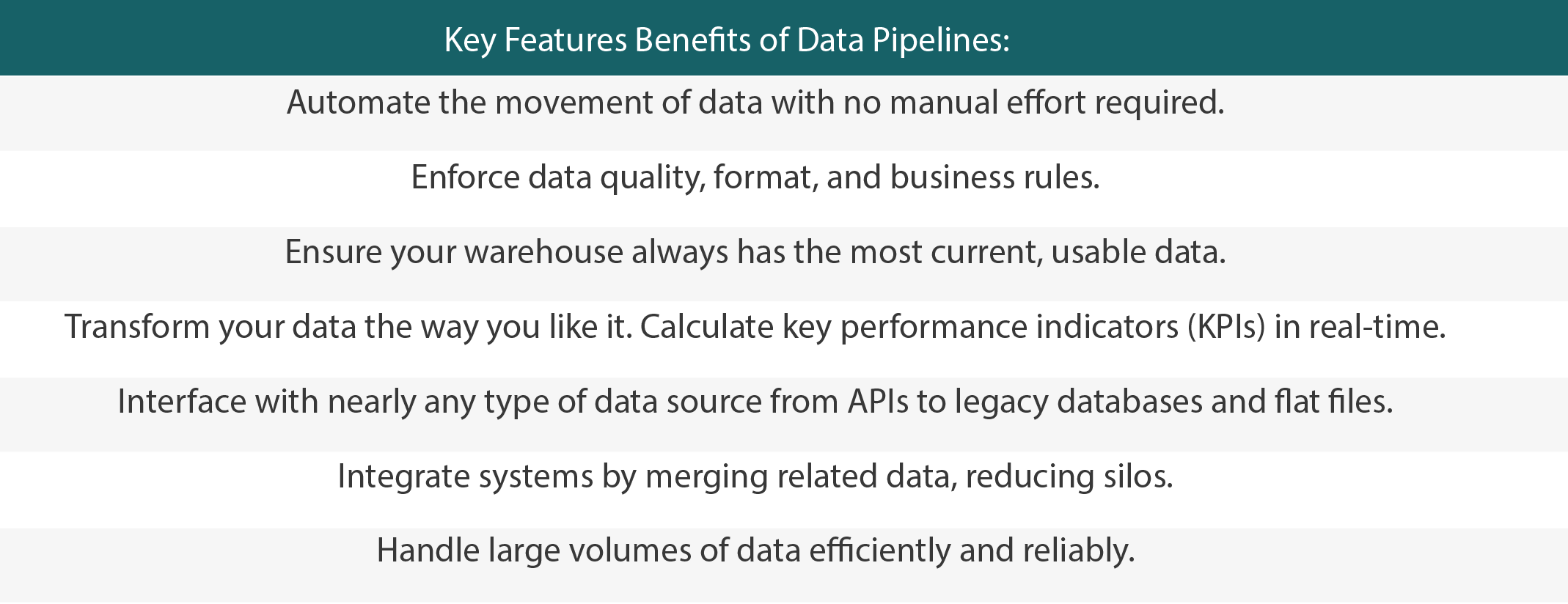

A data pipeline extracts information from your various operational systems, cleans and filters it, combines relevant data sets, and loads the results into your data warehouse for future analysis. Data pipelines are often the first place to apply your data governance rules, helping ensure consistency, quality, and reliability before the data is ever used.
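To make the extract, clean, combine, and load steps concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a production design: the sample rows, the `customer_revenue` table, and the function names are all hypothetical, and an in-memory SQLite database stands in for the data warehouse.

```python
import sqlite3

# Hypothetical rows as they might arrive from two operational systems.
CRM_CUSTOMERS = [
    {"customer_id": "1", "name": " Acme Corp "},
    {"customer_id": "2", "name": "Globex"},
    {"customer_id": "", "name": "Missing Id"},  # fails a governance rule
]
BILLING_INVOICES = [
    {"customer_id": "1", "amount": "100.50"},
    {"customer_id": "1", "amount": "49.50"},
    {"customer_id": "2", "amount": "not-a-number"},  # fails a quality check
    {"customer_id": "2", "amount": "75.00"},
]


def extract():
    """Extract: a real pipeline would query the source systems here."""
    return CRM_CUSTOMERS, BILLING_INVOICES


def transform(customers, invoices):
    """Transform: clean, filter, and combine the two data sets."""
    # Clean names by trimming whitespace; filter out customers with no id.
    names = {
        c["customer_id"]: c["name"].strip()
        for c in customers
        if c["customer_id"]
    }
    totals = {}
    for inv in invoices:
        try:
            amount = float(inv["amount"])
        except ValueError:
            continue  # filter out rows whose amount cannot be parsed
        cid = inv["customer_id"]
        if cid in names:
            totals[cid] = totals.get(cid, 0.0) + amount
    # Combine: one row per customer with a name and an invoice total.
    return [
        (int(cid), names[cid], round(total, 2))
        for cid, total in sorted(totals.items())
    ]


def load(rows, conn):
    """Load: write the prepared rows into a warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customer_revenue "
        "(customer_id INTEGER PRIMARY KEY, name TEXT, total REAL)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO customer_revenue VALUES (?, ?, ?)", rows
    )
    conn.commit()


def run_pipeline(conn):
    """Run extract, transform, and load in order and return the loaded rows."""
    customers, invoices = extract()
    rows = transform(customers, invoices)
    load(rows, conn)
    return rows
```

Calling `run_pipeline(sqlite3.connect(":memory:"))` runs all three stages. The rows that break a rule (the customer with no id, the invoice with an unparseable amount) are dropped during the transform step, which is where governance checks like these would typically live.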

Automate Reporting with Data Pipelines

We often see organizations manually exporting reports, reformatting data in spreadsheets, and adjusting it by hand to meet reporting needs. That might work once or twice, but if it becomes part of your routine, it’s time to consider automation.

With data pipelines, you can run those tasks on a schedule, reducing manual effort while ensuring consistency. Better yet, pipelines let you represent your data in the way that is most meaningful to you. For instance, if you're storing time and expense data for reporting, you might want to flag certain holidays, apply classifications for bookkeeping, or track utilization. Rather than doing that work by hand, you can let a well-built data pipeline handle it automatically and reliably.
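The time and expense example above could be sketched as a small transform step in Python. Everything here is an assumption made for illustration: the holiday list, the billing codes, the field names, and the definition of utilization as billable hours divided by total hours. Your own rules would come from your governance framework.

```python
from datetime import date

# Hypothetical reference data; a real pipeline would load these from governed tables.
HOLIDAYS = {date(2024, 7, 4), date(2024, 12, 25)}
BILLABLE_CODES = {"CLIENT", "PROJECT"}


def enrich_entry(entry):
    """Add a holiday flag and a bookkeeping classification to one time entry."""
    enriched = dict(entry)  # copy so the raw record is left unchanged
    enriched["is_holiday"] = entry["work_date"] in HOLIDAYS
    enriched["classification"] = (
        "billable" if entry["code"] in BILLABLE_CODES else "non-billable"
    )
    return enriched


def utilization(entries):
    """Assumed definition: billable hours / total hours (0.0 if no hours)."""
    total = sum(e["hours"] for e in entries)
    billable = sum(
        e["hours"] for e in entries if e["classification"] == "billable"
    )
    return billable / total if total else 0.0


# Hypothetical raw time entries for one employee.
raw_entries = [
    {"employee": "A", "work_date": date(2024, 7, 3), "code": "CLIENT", "hours": 6.0},
    {"employee": "A", "work_date": date(2024, 7, 3), "code": "ADMIN", "hours": 2.0},
    {"employee": "A", "work_date": date(2024, 7, 4), "code": "PTO", "hours": 8.0},
]
enriched_entries = [enrich_entry(e) for e in raw_entries]
```

Because the rules live in code rather than in a spreadsheet, every scheduled run applies the same holiday flags and classifications, and a rule change is made once instead of in every report.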

Data Pipelines That Do More Than Move Data

While most pipelines focus on collecting, transforming, and storing data, they can also deliver data to external systems, such as vendor or business partner integrations. A common example is Electronic Data Interchange (EDI), where business systems exchange structured data with one another in standardized formats.

Data pipelines are one of the two foundational technical components of your data infrastructure. Together with your data warehouse, they help eliminate many of the day-to-day challenges organizations face in managing and leveraging data.

Clark Schaefer Consulting helps organizations design and implement data pipelines that are scalable, reliable, and aligned with business goals. Our team provides guidance through every stage of the process, including strategy, design, implementation, and ongoing support. Contact us to learn how we can help you build a stronger data foundation.